Human & Robot Learning (HRL) explores and exploits human and robot adaptation at different granularities and in different directions. The human may be directly involved in controlling the robot, or may be loosely coupled to the control system, providing guidance or initial data for learning.

At one extreme, humans serve as the source of skills generated on robots, which falls under the general field of Learning from Demonstration (LfD). Here we focus on both sides of the coin. On the human side, we develop scientific and technological methods for probing how humans adapt to robot control and how the human effort required to teach robots can be reduced. On the robot side, we develop novel learning architectures that make efficient use of the data provided by humans.

At the other extreme, robots can serve as teachers or test beds for investigating and improving human behavior. For example, collaborative robots can be used to assess how well humans can exploit existing static robot skills to learn faster and more accurately, or how humans adapt to novel dynamical environments.

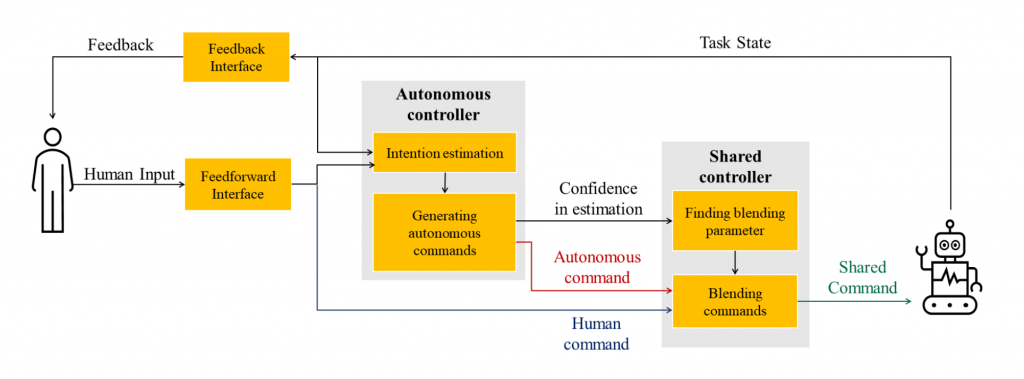

Another theme in our research is Shared Control and Human-Robot Co-adaptation. Here the aim is to develop computational mechanisms for virtuous learning, in which human learning helps robot learning and, in return, robot learning eases human learning, forming a virtuous cycle. Our research here also looks into the more cognitive aspects of learning. We investigate how robots can build Trust in human partners, which should pave the way for Reciprocal Trust between robots and humans.

[1] Aktas H, Nagai Y, Asada M, Oztop E, Ugur E (2024) Correspondence learning between morphologically different robots through task demonstrations. IEEE Robotics and Automation Letters 9(5): 4463-4470. doi: 10.1109/LRA.2024.3382534

[2] Babic J, Kunavar T, Oztop E, Kawato M (2025) Success-Efficient/Failure-Safe Strategy for Hierarchical Reinforcement Motor Learning. PLoS Comput Biol 21(5): e1013089. doi: 10.1371/journal.pcbi.1013089

[3] Amirshirzad N, Asada M, Oztop E (2023.8) Context Based Echo State Networks for Robot Movement Primitives. 32nd IEEE International Conference on Robot & Human Interactive Communication (RO-MAN) Busan, South Korea

[4] Akbulut B, Girgin T, Mehrabi A, Asada M, Ugur E, Oztop E (2023.5) Bimanual rope manipulation skill synthesis through context dependent correction policy learning from human demonstration. IEEE International Conference on Robotics and Automation (ICRA 2023), London, UK

[5] Celik MB, Ahmetoglu A, Ugur E, Oztop E (2023.11) Developmental Scaffolding with Large Language Models. 23rd IEEE International Conference on Development and Learning (ICDL 2023), Macau, China

[6] Kirtay M, Hafner VV, Asada M, Oztop E (2023) Trust in robot-robot scaffolding. IEEE Transactions on Cognitive and Developmental Systems 15(4): 1841-1852. doi: 10.1109/TCDS.2023.3235974

[7] Seker MY, Ahmetoglu A, Nagai Y, Asada M, Oztop E, Ugur E (2022) Imitation and mirror systems in robots through Deep Modality Blending Networks. Neural Networks 146: 22-35

[8] Amirshirzad N, Kumru A, Oztop E (2019) Human Adaptation to Human-Robot Shared Control. IEEE Transactions on Human-Machine Systems 49(2): 126-136. doi: 10.1109/THMS.2018.2884719