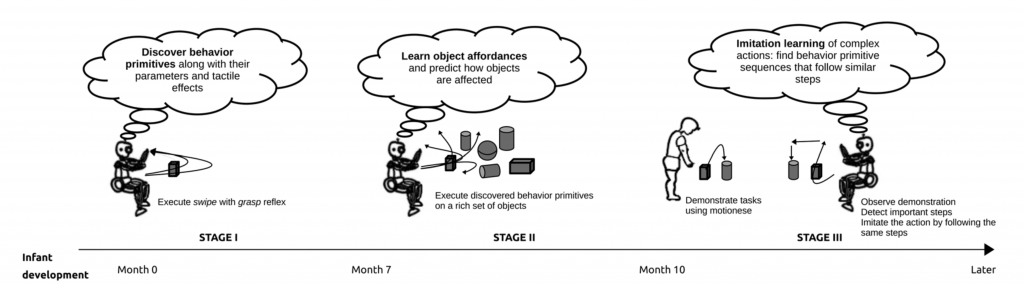

Human-like Lifelong Learning (HLL) aims to develop neural and other learning architectures in which the resulting systems mimic selected properties of human behavior and learning to support an efficient lifelong learning regime.

One of the main themes we explore is how biological brains make effective use of resources, time, and energy while learning in a lifelong manner. To this end, we develop models of continual and multi-task learning in which the learning agent is not limited to a single task. Instead, it can learn multiple tasks while managing its Learning Progress across tasks as well as its Neural Energy Expenditure. We also investigate online active learning, developing methods that let artificial systems decide which action to take so as to minimize their learning effort with little or no loss in learning accuracy.
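To make the idea of managing Learning Progress across tasks concrete, here is a minimal sketch of learning-progress-driven task selection. It assumes that learning progress (LP) is the absolute change in mean error between two consecutive windows of recent errors, which is similar in spirit to formulations used in intrinsic-motivation work but is not necessarily the measure used in our models. The class `LearningProgressSelector` and its `window` and `epsilon` parameters are hypothetical, and the sketch does not model neural energy expenditure.

```python
import random
from collections import deque


class LearningProgressSelector:
    """Hypothetical learning-progress (LP) based task selector.

    LP for a task is estimated as the absolute change in mean error between
    the older and the newer half of its most recent 2 * window errors.
    """

    def __init__(self, n_tasks, window=5, epsilon=0.1, seed=0):
        self.window = window
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        # Only the 2 * window most recent errors are kept per task.
        self.errors = [deque(maxlen=2 * window) for _ in range(n_tasks)]

    def progress(self, task):
        errs = list(self.errors[task])
        if len(errs) < 2 * self.window:
            return float("inf")  # too little data: try this task first
        older = sum(errs[: self.window]) / self.window
        newer = sum(errs[self.window:]) / self.window
        return abs(older - newer)

    def select(self):
        # Epsilon-greedy: occasionally pick a random task to keep exploring.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.errors))
        scores = [self.progress(t) for t in range(len(self.errors))]
        return max(range(len(scores)), key=scores.__getitem__)

    def update(self, task, error):
        self.errors[task].append(error)


if __name__ == "__main__":
    selector = LearningProgressSelector(n_tasks=3, epsilon=0.0)
    for step in range(10):
        selector.update(0, 0.05)              # already mastered: flat, low error
        selector.update(1, 1.0 / (1 + step))  # being learned: error keeps falling
        selector.update(2, 0.9)               # too hard: flat, high error
    print(selector.select())  # prints 1: the only task still improving
```

A mastered task and an unlearnable task both show flat error and therefore near-zero progress, so the selector spends its effort on the task where learning is actually happening.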

In another line of our research, we explore energy-efficient learning architectures built from scratch. Notably, we develop resource-efficient learning systems based on reservoir computing, a green alternative to the prominent, energy-hungry, gradient-based deep learning approaches. Our recent focus is on exploiting higher-order interactions between neural units to increase the capacity of reservoir-based learning systems.
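To illustrate why reservoir computing is cheap to train and what higher-order interactions can add, here is a minimal sketch of an echo state network in pure Python. It assumes one simple way of adding such interactions: augmenting the linear readout with pairwise products of reservoir states. This is an illustrative assumption, not necessarily the formulation used in our work, and all function names and parameter values are hypothetical. The recurrent weights stay fixed and only the readout is fitted in closed form by ridge regression, so no gradient-based training of the network is needed.

```python
import math
import random


def make_reservoir(n_units, input_scale=1.0, rho=0.9, seed=0):
    """Random tanh reservoir with fixed (untrained) weights.

    The recurrent matrix is rescaled so its largest absolute row sum is rho < 1;
    since tanh is 1-Lipschitz, the state update is then a contraction in the
    max-norm, a simple sufficient condition for fading memory.
    """
    rng = random.Random(seed)
    w = [[rng.uniform(-1, 1) for _ in range(n_units)] for _ in range(n_units)]
    row_max = max(sum(abs(v) for v in row) for row in w)
    w = [[rho * v / row_max for v in row] for row in w]
    w_in = [input_scale * rng.uniform(-1, 1) for _ in range(n_units)]
    return w, w_in


def run_reservoir(w, w_in, inputs):
    """Return the states x(t) = tanh(W x(t-1) + w_in * u(t)) for each input u(t)."""
    x = [0.0] * len(w_in)
    states = []
    for u in inputs:
        x = [math.tanh(sum(a * b for a, b in zip(row, x)) + wi * u)
             for row, wi in zip(w, w_in)]
        states.append(x)
    return states


def readout_features(x, higher_order):
    """Bias and linear terms, plus all pairwise products x_i * x_j (i <= j) if requested."""
    f = [1.0] + list(x)
    if higher_order:
        f += [x[i] * x[j] for i in range(len(x)) for j in range(i, len(x))]
    return f


def ridge_fit(rows, targets, lam=1e-6):
    """Solve (Phi^T Phi + lam I) w = Phi^T y by Gaussian elimination with partial pivoting."""
    d = len(rows[0])
    a = [[sum(r[i] * r[j] for r in rows) + (lam if i == j else 0.0) for j in range(d)]
         for i in range(d)]
    b = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(d)]
    for col in range(d):
        piv = max(range(col, d), key=lambda k: abs(a[k][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for k in range(col + 1, d):
            factor = a[k][col] / a[col][col]
            for j in range(col, d):
                a[k][j] -= factor * a[col][j]
            b[k] -= factor * b[col]
    w = [0.0] * d
    for i in reversed(range(d)):
        w[i] = (b[i] - sum(a[i][j] * w[j] for j in range(i + 1, d))) / a[i][i]
    return w


def train_mse(higher_order, n_units=10, steps=400, washout=50, seed=1):
    """Fit only the readout and return its training mean squared error."""
    rng = random.Random(seed)
    u = [rng.uniform(-1, 1) for _ in range(steps)]
    # The target requires a multiplicative interaction: y(t) = u(t) * u(t-1).
    y = [0.0] + [u[t] * u[t - 1] for t in range(1, steps)]
    w, w_in = make_reservoir(n_units)
    rows = [readout_features(x, higher_order)
            for x in run_reservoir(w, w_in, u)[washout:]]
    targets = y[washout:]
    w_out = ridge_fit(rows, targets)
    preds = [sum(c * f for c, f in zip(w_out, r)) for r in rows]
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


if __name__ == "__main__":
    print("linear readout MSE:      ", train_mse(higher_order=False))
    print("higher-order readout MSE:", train_mse(higher_order=True))
```

On this product-of-inputs target, the higher-order readout should reach a lower training error than the linear one. The price is a feature count that grows quadratically with the number of units (66 instead of 11 readout weights for 10 units), which is the capacity-versus-cost trade-off such augmentations have to manage.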

Another important line of our research concerns symbol formation and neurosymbolic systems. In biological systems, the continuous flux of sensorimotor signals is represented by high-level constructs that can be referred to as concepts or symbols (of the brain). We aim to develop systems that can autonomously form such representations and use them for efficient learning and behavior generation. Such models not only help explain how the brain works but also yield techniques for machine learning and robotics, where symbols can be used in classical planning algorithms.
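To show how discrete symbols formed from continuous sensorimotor data can feed a classical planner, here is a minimal sketch. It assumes that fixed thresholds turn continuous features into binary predicates, whereas the systems described above aim to form such representations autonomously. The thresholds, the toy grasp/lift data, and the names `to_symbol`, `learn_effects`, and `plan` are all hypothetical, and breadth-first search stands in for a full classical planner.

```python
from collections import Counter, defaultdict, deque


def to_symbol(features, thresholds):
    """Discretize a continuous feature vector into a tuple of binary predicates."""
    return tuple(int(f > t) for f, t in zip(features, thresholds))


def learn_effects(experience, thresholds):
    """Map each (symbol, action) pair to its most frequent resulting symbol.

    experience: list of (features_before, action, features_after) tuples.
    """
    counts = defaultdict(Counter)
    for before, action, after in experience:
        key = (to_symbol(before, thresholds), action)
        counts[key][to_symbol(after, thresholds)] += 1
    return {key: c.most_common(1)[0][0] for key, c in counts.items()}


def plan(model, start, goal):
    """Breadth-first search over symbolic states; returns an action list or None."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for (symbol, action), result in model.items():
            if symbol == state and result not in seen:
                seen.add(result)
                frontier.append((result, actions + [action]))
    return None


# Hypothetical toy data: features are (object_height, gripper_closure) in [0, 1].
THRESHOLDS = (0.5, 0.5)
EXPERIENCE = [
    ((0.10, 0.10), "grasp", (0.10, 0.90)),
    ((0.12, 0.05), "grasp", (0.11, 0.95)),
    ((0.10, 0.90), "lift", (0.80, 0.90)),
    ((0.10, 0.10), "lift", (0.10, 0.10)),     # lifting with an open gripper: no effect
    ((0.80, 0.90), "release", (0.10, 0.10)),  # released object falls back down
]

if __name__ == "__main__":
    model = learn_effects(EXPERIENCE, THRESHOLDS)
    print(plan(model, start=(0, 0), goal=(1, 1)))  # ['grasp', 'lift']
```

Once noisy continuous readings collapse onto a handful of discrete states, the learned effect table is small enough for a standard search procedure to chain actions toward a goal that was never demonstrated as a single sequence.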

[1] Arditi E, Nagai Y, Ugur E, Asada M, Oztop E (2025.09) Emulating Perceptual Development in Deep Reinforcement Learning. IEEE International Conference on Development and Learning (ICDL 2025), Prague, Czech Republic

[2] Ahmetoglu A, Oztop E, Ugur E (2025) Symbolic Manipulation Planning with Discovered Object and Relational Predicates. IEEE Robotics and Automation Letters. doi: 10.1109/LRA.2025.3527338

[3] Ahmetoglu A, Celik B, Oztop E, Ugur E (2024) Discovering Predictive Relational Object Symbols with Symbolic Attentive Layers. IEEE Robotics and Automation Letters 9(2):1977-1984. doi: 10.1109/LRA.2024.3350994

[4] Celebi B, Asada M, Oztop E (2024.07) Augmenting Reservoirs with Higher Order Terms for Resource Efficient Learning. International Joint Conference on Neural Networks (IJCNN 2024), Yokohama, Japan

[5] Amirshirzad N, Eren MA, Oztop E (2024) Context-Based Echo State Networks with Prediction Confidence for Human-Robot Shared Control. arXiv preprint arXiv:2412.00541

[6] Eren MA, Oztop E (2024) Sample Efficient Robot Learning in Supervised Effect Prediction Tasks. arXiv preprint arXiv:2412.02331

[7] Koulaeizadeh Z, Oztop E (2024) Modulating Reservoir Dynamics via Reinforcement Learning for Efficient Robot Skill Synthesis. arXiv preprint arXiv:2411.10991

[8] Oztop E, Asada M (2022) On the weight and density bounds of polynomial threshold functions. Discrete Mathematics 345(8). doi: 10.1016/j.disc.2022.112912

[9] Say H, Oztop E (2023.12) A Model for Cognitively Valid Lifelong Learning. IEEE International Conference on Robotics and Biomimetics (ROBIO 2023), Koh Samui, Thailand

[10] Ahmetoglu A, Ugur E, Asada M, Oztop E (2022) High-level Features for Resource Economy and Fast Learning in Skill Transfer. Advanced Robotics 1-13