Workshop on orofacial motor control for swallow and vocalization

Date

2015-03-27 (Fri) 9:00-18:00

Place

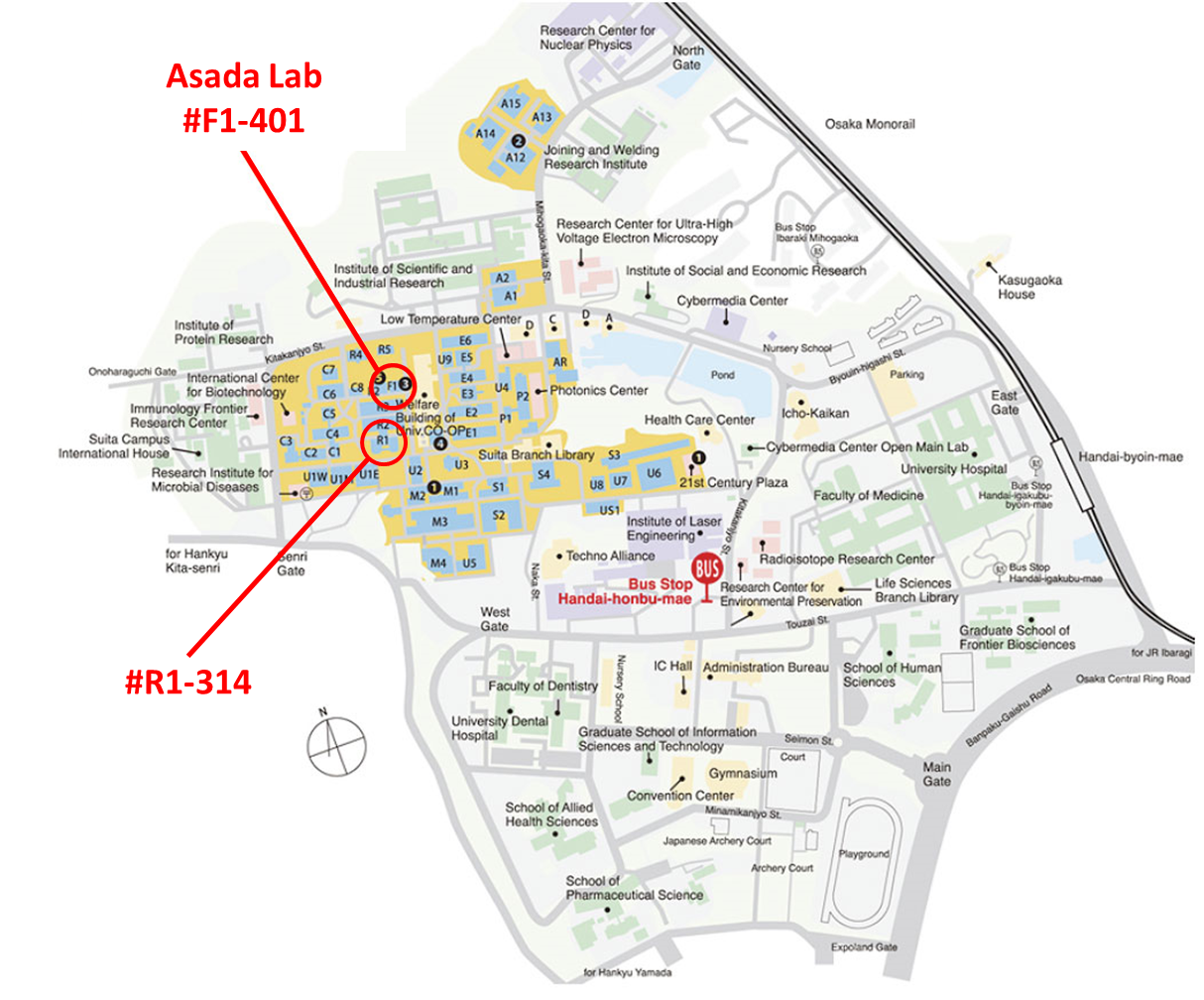

#R1-314, Suita Campus, Osaka University

2-1, Yamadaoka, Suita city, Osaka, Japan

Program overview

| Time | Speaker | Title |
|---|---|---|
| 9:00-9:40 | Minoru Asada | “Towards Constructive Orofacial Science ~ Starting from Early development of vocalization through infant-caregiver interactions ~” |
| 9:40-10:20 | Ian S. Howard | “A computational model of an infant that learns to pronounce through caregiver interactions” |
| 10:30-11:10 | Paul Verschure | “Achieving ecologically valid human-robot multi-modal interaction: The Distributed Adaptive Control Cognitive Architecture applied to humanoid robots” |
| 11:10-11:50 | Atsushi Yoshida | “Neuronal Mechanisms Controlling Jaw-Movements in Comparison with Limb-Movements” |
| 11:50-13:00 | Lunch | - |
| 13:00-13:40 | Kazutaka Takahashi | “Involvement of unit spiking activities and local field potentials in the orofacial portion of the primary motor cortex in non-human primates for swallowing during natural feeding behavior” |
| 13:40-14:20 | Tomio Inoue | “Premotoneuronal inputs and dendritic responses in jaw muscle motoneurons” |
| 14:20-15:00 | Takahiro Ono | “Tongue pressure biomechanics in swallowing” |
| 15:00-15:20 | Break | - |
| 15:20-16:20 | Yoshihiro Ohashi, Fumiko Oshima | “Dysphagia diagnosis and management for stroke and Parkinson’s disease. Effect of transcranial direct current stimulation (tDCS) on swallowing phase in post-stroke dysphagia” |
| 16:20-17:00 | Nobutsuna Endo | “A robotics approach on vocalization” |
| 17:10-18:00 | - | Robot demonstration (Asada Lab., #F1-401) |

Detail

- Minoru Asada

- Department of Adaptive Machine Systems, Graduate School of Engineering, Osaka University, Japan.

- “Towards Constructive Orofacial Science ~ Starting from Early development of vocalization through infant-caregiver interactions ~”

- Orofacial motor control for swallowing and vocalization has not yet become a central topic in neuroscience and related disciplines. The issue seems difficult to address, although it is clearly vital to human survival: eating, breathing, vocalizing, and so on. In this workshop, we intend to reveal new horizons for this issue through constructive approaches, such as computer simulations and real robots, in addition to current approaches. In my talk, I will address the early development of vocalization through infant-caregiver interactions as one of the topics of the workshop. I first review the early development of infant speech perception and articulation, drawing on observational studies in developmental psychology and neuroscientific imaging studies. Next, computational modeling approaches are explained. Then, constructive approaches with real robot experiments and computer simulations are introduced to discuss how infant-caregiver interactions affect the early development of vocalization.

- Ian S. Howard

- Centre for Robotics and Neural Systems, University of Plymouth, PL4 8AA, UK.

- “A computational model of an infant that learns to pronounce through caregiver interactions”

- Almost all theories of infant speech development assume that imitation is the means by which the speech sounds that make up words are learned. However, there is accumulating evidence to support an alternative account of how this systemic aspect of pronunciation develops. Here we test this non-imitative mechanism, using Elija, a computational model of an infant.

Elija begins by first teaching himself basic speech sounds using unsupervised active learning to discover potential vocal motor patterns that lead to distinct motor patterns and sensory consequences. As babbling commences, interaction with a caregiver further shapes his vocal development. To capture this process, separate interaction experiments were run, using native speakers of English, French and German playing the role of caregiver.

We analysed the interactions between Elija and the caregivers based on phonemic transcriptions of the caregivers’ utterances. We show that during the reformulation process, caregivers were biased to interpret Elija’s output within the framework of their native languages, but also in terms of their own individual preferences.

In the final stage of the experiment, we show that each caregiver was able to teach Elija to pronounce some simple words by the serial imitation of their component sounds.

- Paul Verschure

- Synthetic Perceptive, Emotive and Cognitive Systems laboratory, Center of Autonomous Systems and Neurorobotics, Universitat Pompeu Fabra & Catalan Institute of Advanced Studies (ICREA)

- “Achieving ecologically valid human-robot multi-modal interaction: The Distributed Adaptive Control Cognitive Architecture applied to humanoid robots”

- Intelligent artifacts and robots are expected to operate in complex physical and social environments. Whereas robots are slowly but surely being readied for the physical world, the social world is still at the horizon. The deployment of service and companion robots, however, requires that humans and robots can understand each other and can communicate. In other words, whereas humans have evolved an advanced codification of their behavior that provides the basis for the transparency of most of their actions and communication, for now robots do not share this behavioral code and cannot explain their actions to human users, hampering their collaboration. Here I will describe a number of projects that are underway to advance a cognitive architecture for dyadic human-robot interaction, introducing a new transparency in human-robot interaction by allowing robots to both understand their own actions and those of humans, and to interpret and communicate these in human-compatible intentional terms expressed as a language-like communication channel we call Robotese.

- Atsushi Yoshida

- Department of Oral Anatomy and Neurobiology, Osaka University Graduate School of Dentistry, Japan.

- “Neuronal Mechanisms Controlling Jaw-Movements in Comparison with Limb-Movements”

- Sound jaw-movements are important for a high quality of life, especially in an aging society. Jaw-movements are generated by contraction of jaw-closing (JC) muscles and their antagonistic jaw-opening muscles. We have studied the neuronal mechanisms controlling jaw-movements in cats in vivo. In my talk, I will focus on how single JC motoneurons are controlled by excitatory or inhibitory inputs in comparison with limb motoneurons. Under electron microscopy, we found many excitatory and inhibitory axon terminals synapsing on the soma and dendrites of intracellularly labeled single JC motoneurons. The number of excitatory and inhibitory synapses was slightly larger than that on limb motoneurons. In contrast, both the number of excitatory contacts between single trigeminal mesencephalic afferents and their target single JC motoneurons and the number of inhibitory contacts between single trigeminal oral subnucleus neurons and their target single JC motoneurons were smaller than the corresponding numbers of excitatory and inhibitory contacts on limb motoneurons. Further, we found a new mechanism in motor control: single excitatory premotoneurons excite a smaller number of JC motoneurons, while single inhibitory premotoneurons inhibit a larger number of JC motoneurons. Our data indicate neural mechanisms that can produce more delicate muscle control in jaw-movements than in limb-movements.

- Kazutaka Takahashi

- Department of Organismal Biology and Anatomy, University of Chicago.

- “Involvement of unit spiking activities and local field potentials in the orofacial portion of the primary motor cortex in non-human primates for swallowing during natural feeding behavior”

- We have been using a non-human primate model to study cortical involvement in orofacial motor control, particularly swallowing. We trained macaque monkeys to eat while seated in a primate chair, and recorded 3D jaw kinematics and 2D tongue kinematics. In this study, we specifically focused on the transitions from rhythmic chew cycles to swallowing. We recorded intracortical signals, both unit spiking activities and local field potentials (LFPs), from the orofacial area of the primary motor cortex (MIo) with a chronically implanted high-density multi-channel array.

We first analyzed LFPs and found that power in the beta oscillation range increased prior to transitions from chewing to swallowing. Second, we analyzed spiking activities using a few methods. Population spiking contains information about jaw and tongue kinematics as well as behavioral state changes. Finally, a Granger causality model was used to estimate their functional connectivity during transitions between chewing cycles and during chew-to-swallow transitions. We found that during rhythmic chewing, the network was dominated by excitatory connections, while during transitions from rhythmic chews to swallows the numbers of excitatory and inhibitory connections became comparable. These results suggest that networks of neurons in MIo change their operative states with changes in kinematically defined behavioral states.

- Tomio Inoue

- Department of Oral Physiology, Showa University School of Dentistry, Tokyo, Japan.

- “Premotoneuronal inputs and dendritic responses in jaw muscle motoneurons”

- Feeding is one of the most important survival functions for mammals. To understand the neural mechanisms underlying jaw motor function during feeding, we examined the electrophysiological and morphological properties of premotor neurons targeting jaw-muscle motoneurons, and postsynaptic responses in jaw-muscle motoneurons, in brainstem slice preparations obtained from P1-12 neonatal rats using whole-cell recordings and laser photolysis of caged glutamate. Premotor neurons were detected with Ca²⁺ imaging on the basis of antidromic responses to stimulation of the trigeminal motor nucleus (MoV), and were divided into two groups: those firing at higher frequency (HF neurons) and those firing at lower frequency (LF neurons). Intracellular labeling revealed that the morphologies of axons and dendrites of HF neurons were different from those of LF neurons. Laser uncaging of glutamate in the area where the premotor neurons were located induced postsynaptic responses in the motoneurons. Focal uncaging of glutamate in the dendrite of a jaw-closing motoneuron evoked a dendritic NMDA spike. These results suggest that premotor neurons targeting the MoV with different firing properties have different dendritic and axonal morphologies, and that dendritic NMDA spikes may contribute to boosting postsynaptic responses. These premotor neuron classes and dendritic properties of motoneurons may play distinctive roles in suckling and chewing.

- Takahiro Ono

- Division of Comprehensive Prosthodontics, Niigata University Graduate School of Medical and Dental Sciences.

- “Tongue pressure biomechanics in swallowing”

- Swallowing (deglutition) is a highly complex physical action performed through the sequential, coordinated contraction of oral, pharyngeal and esophageal muscles. Intraluminal pressure-flow dynamics through the oral cavity and pharynx are key to the biomechanics of transferring the bolus to be swallowed.

We have developed a monitoring system that measures tongue pressure against the hard palate and laryngeal movement with original sensors. Tongue pressure is measured using the “Swallow Scan” system with an ultra-thin sensor sheet, which can analyze the sequential order and magnitude of pressure production among five measuring points. Pathological changes in such biomechanical parameters are closely related to the incidence of dysphagia in patients with tongue-motor deficits. On the other hand, dysphagia can be improved by adjusting tongue pressure production with an oral appliance (palatal augmentation prosthesis).

Our system is expected to serve as a clinical diagnostic tool for dysphagia rehabilitation as well as an in vivo monitoring device for food texture. The possibility of application to human robotics will be discussed in this workshop.

- Yoshihiro Ohashi, Fumiko Oshima

- Japanese Red Cross Kyoto Daiichi Hospital

- “Dysphagia diagnosis and management for stroke and Parkinson’s disease. Effect of transcranial direct current stimulation (tDCS) on swallowing phase in post-stroke dysphagia”

- Dysphagia is a frequent complication of stroke and of neurodegenerative diseases such as Parkinson’s disease. It is often associated with considerably high morbidity and mortality due to aspiration pneumonia and malnutrition.

The videofluoroscopic swallow study (VFSS) is a useful tool for evaluating swallowing physiology and provides valuable information for intervention, i.e., rehabilitative therapy (swallowing training), surgery (cricopharyngeal sphincterotomy, etc.) and pharmacological therapies. In clinical settings, a multidisciplinary approach is critical for the diagnosis and management of dysphagia.

In recent decades, non-invasive brain stimulation has been reported to enhance functional recovery by inducing brain plasticity in post-stroke patients. Some studies have shown that transcranial direct current stimulation (tDCS) applied over the pharyngeal area of the primary motor cortex (M1) promoted recovery from swallowing dysfunction in post-stroke patients. We treated a chronic post-stroke patient with intractable dysphagia who required enteral nutrition due to multiple cerebral infarctions. After the tDCS intervention, VFSS revealed a shortening of the oral transit time during fluid intake, and of the swallowing reaction time during jelly and fluid intake, compared with pre-intervention values. This suggests that tDCS over the M1 pharyngeal area in combination with swallowing training may improve coordinated movements of oral and pharyngeal organs in post-stroke patients.

- Nobutsuna Endo

- Department of Adaptive Machine Systems, Graduate School of Engineering, Osaka University, Japan.

- “A robotics approach on vocalization”

- Several observational studies have suggested that the vocal development of an infant towards language acquisition proceeds through interaction with caregivers. However, owing to the difficulty of controlling infant vocalization, the underlying mechanisms of the process and how they are affected by the behavior of the caregiver are yet to be clearly established. To clarify these outstanding issues, we developed an infant-like vocal robot, “Lingua,” as a controllable vocal platform that affords a model of real infant vocalization. Lingua has two features, namely, an infant-like voice and high articulation capability. The shapes of its vocal cords and vocal tract are similar to those of a 6-month-old infant as determined from anatomical data. Seven degrees of freedom of tongue articulation were realized by a sophisticated design comprising linkage mechanisms inside a miniaturized vocal tract, which enabled high articulation performance. The relationship between the material hardness of the vocal fold and its acoustic performance was examined, and the results of preliminary experiments showed that the robot could vocalize almost the same ranges of fundamental frequencies and vowel-like utterances as an infant.

Contact

- Nobutsuna ENDO, Ph.D.

- Specially-appointed researcher, Asada Lab., Department of Adaptive Machine Systems, Graduate School of Engineering, Osaka University

- endo[at]ams.eng.osaka-u.ac.jp