Invited Talk 3

Thursday, July 21, 9:00-9:50 (International Conference Hall)

Brain-Body-Environment Interactions in Cognition

Toshio Inui

Kyoto University, Japan

Abstract

1. The visual world and estimation of the outer world

The visual world is estimated from retinal images and represented in

the brain. We thus conduct ourselves in our own world. In order to behave successfully

in such a visual world in the brain, the world must be stable and sensitive

to various changes. In addition, when dealing with moving objects, we must be

able to predict states beforehand. We can therefore control our own actions,

and our visual world must also be appropriate for this purpose. In actual fact,

we take advantage of such control using predictions when we control our own

body movements.

2. Two visual systems and embodied cognition

The study published by Goodale in 1991 has exerted a great deal of

influence on the brain and cognitive sciences. He discovered that two functions

are generated by different pathways in the brain; one is the function for object

manipulation; the other, for object recognition. Neural activities from the

occipital lobe to the parietal lobe (the dorsal system) are responsible for

the utilization of knowledge about how to use objects, known as procedural or

functional knowledge. Conversely, activities from the occipital lobe to the

temporal lobe (the ventral system) are essential to utilizing knowledge about

the classification of shapes or naming of objects.

The dorsal system is involved in processing information about how

to use objects, and is a “perception for action system”. When we

try to drink from a glass of water in front of us, we reach out our arm and

grasp the glass. To achieve this, we must accurately gauge the spatial relationship

between our hand and the object (e.g., the position of the glass compared to

the center of our body). We must also realize the appropriate plan to move our

hand and arm. Nevertheless, such action is typically completed without any difficulties

whatsoever. We seem to follow the requisite procedure automatically and unconsciously

at the same time as looking at the glass. Therefore it can be said that we perceive

an object not only with vision, but also with our body. Such a cognitive function

is called “embodied cognition”, and results from good coordination

between vision and motor abilities, based on close communication among the occipital

and parietal lobes and the motor system.

The function of such a mechanism of coordination brings about not

only the transformation of visual information into motor information, but also

the generation of visual images from a motor data set. Thanks to smooth execution

of this bidirectional processing, we perform various mental simulations in

the brain. In fact such mental simulation is extremely important for the function

of the mind. Furthermore, a similar mechanism is considered to exist between

auditory perception and articulation. As we are capable of learning through

imitation with visuo-motor coordination, we can also learn articulation with

auditory-motor coordination. This is important in learning a second language,

as well as the native language.

3. Brain-body-environment

We skillfully perform various manipulations of the environment or information

obtained from the environment. Most of these manipulations succeed through predictive

processing of information. Assessing whether a manipulation will result in the

expected state is a prerequisite for predictive processing or predictive control.

We therefore receive sensory feedback from the environment or body and compare

this with the predicted state. This indicates the importance of both visual

and somatosensory information, including tactile information. Merleau-Ponty

compared relationships between humans and the environment as described above

to exchanges between a subject and an object, or exchanges between something

that touches and something that is touched. Complex interrelations should develop

in such situations, rather than a simple and static relationship between the

subject and object. Thus, the subject who perceives the environment is considered

to be involved in an inseparable and complex relationship with the environment.

4. Prediction and imitation

The imitation and prediction of actions by other organisms represent the basis

for creating high-level cognitive functions. To imitate the actions or voices

of other people, visual or auditory information must be transformed into self-generated

movements. In the same way as an infant imitates the parents, parents imitate

infants. These interactions are connected through dialogue with gestures. Meltzoff

and Gopnik (1993) proposed that the infant extends the cognitive functions through

such “mutual imitation games”. The important problem here is that

an infant understands the equivalence of information from different modalities

(visual information on action by others and motor information by the self).

Another issue involves the mechanisms used to correct errors of imitation. Meltzoff

and Moore (1997) suggested a conceptual model for these problems. However, much

more research is needed before a clear understanding of this topic is achieved.

The mirror neuron, discovered by Rizzolatti et al. (1996), is clearly

involved in comprehension of the actions of others. Moreover, Arbib and Rizzolatti

(1996) have suggested that the transformation from visual information to movement

is realized in the pathway from STS to F5, whereas the transformation from movement

to visual information is conducted in the pathway from F5 to STS. They proposed

that these pathways play important roles in comprehending the gestures of others.

5. Investigating neural correlations of state estimation in visually guided movements

State estimation of self-movement, which is based on both motor commands and

sensory feedback, has been suggested as the essence of human movement control

to compensate for inherent feedback delays in sensorimotor loops. We have investigated

the neural basis for state estimation of human movement (Ogawa et al., 2005)

using event-related functional magnetic resonance imaging (fMRI).

Participants performed visually guided movements with an artificial

delay introduced into actual visual feedback during movement. As movement control

based purely on feedback information is impaired under delayed visual feedback

conditions, prediction of self-movement was considered likely to be used to

maintain relatively accurate performance in the presence of feedback delays.

Such internal state estimation was then compared with actual feedback information,

and prediction error was used to optimize the estimated state. This study investigated

the neural basis of state estimation and prediction errors of self-movement

by analyzing correlations between motor performance and brain activity under

conditions of delayed visual feedback. Behavioral data were also measured during

the task and used as explanatory variables in analysis of event-related fMRI.

Such parametric fMRI analysis based on motor performance of each subject could

reveal relationships between motor performance and brain activity.
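The idea of using a behavioral measure as an explanatory variable can be sketched as a simple per-trial regression. This is only an illustration under stated assumptions, not the analysis pipeline of the study: the trial values below are made-up numbers, and the function names `pearson_r` and `ols_slope` are hypothetical helpers. A real event-related fMRI analysis would additionally model the hemodynamic response, which is omitted here.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def ols_slope(xs, ys):
    """Least-squares slope of ys regressed on xs (with an intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    return cov / var_x

# Hypothetical per-trial values (NOT data from the study):
# motor error on each trial, and a response amplitude for one region.
motor_error = [1.0, 2.0, 3.0, 4.0, 5.0]
response = [0.5, 0.9, 1.6, 1.9, 2.6]

slope = ols_slope(motor_error, response)
r = pearson_r(motor_error, response)
print(f"slope={slope:.3f}, r={r:.3f}")
```

A positive slope in such a sketch corresponds to a region whose response grows with motor error on a trial-by-trial basis.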

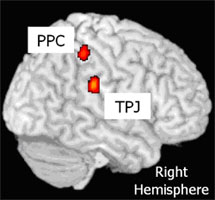

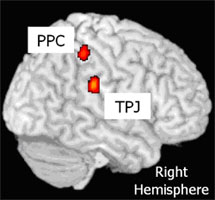

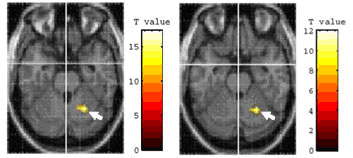

Experiment 1 investigated brain activations that were significantly

correlated with visual feedback delay and motor error by parametrically manipulating

visual feedback delay. Activation of the right posterior parietal cortex (PPC)

was positively correlated with motor error, whereas activation of the right

temporo-parietal junction (TPJ) was observed only in the group with a smaller

increase in motor error following increased visual feedback delay. Experiment

2 involved parametric analysis of motor performance while controlling computer

mouse movement speed during the task. Activity in the right TPJ displayed a

significant positive correlation with motor performance under delayed visual

feedback conditions. In addition, activity of the PPC was greater when motor

error was presented visually (Fig. 1).

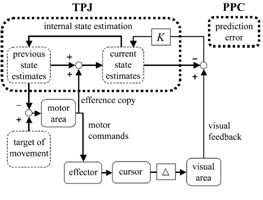

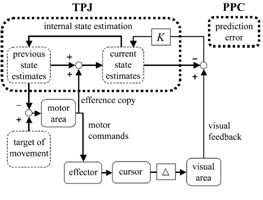

The relationship between activated regions in this study and the

predictive control model is shown schematically in Figure 2. First, a motor

command is generated in the central nervous system (CNS) and sent to the

effector. Actual movement is then generated and visual feedback is received

as the cursor position on a display. Simultaneously, the efference copy of motor

commands is sent to the CNS, which internally estimates self-movement using

the copy and previous state estimates as inputs. State estimates are used to

generate the next motor commands in order to compensate for external feedback

delay (?). Finally, state estimates are compared with actual feedback information,

and prediction error is used to optimize state estimates, multiplied by the

appropriate observer gain (K). The current results suggest that the PPC is involved

in such a prediction error with regard to visual coordinates, and that the TPJ

is involved in state estimation of movements.
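The loop described above can be sketched as a simple discrete-time observer. This is an illustrative sketch only, not the model used in the study: the one-dimensional cursor, the constant motor command, the mismatch between the internal model and the plant (`plant_gain`), and all parameter values are assumptions. Only the observer gain K and the comparison of delayed feedback with the state estimate are taken from the text.

```python
from collections import deque

def simulate(K, delay_steps=5, n_steps=200, dt=0.01, plant_gain=0.8):
    """Return the absolute estimation error at every step.

    K           : observer gain applied to the prediction error
    delay_steps : visual feedback delay, in time steps (assumed value)
    plant_gain  : true effector gain; the internal model assumes 1.0,
                  so the estimate drifts unless feedback corrects it
    """
    x = 0.0       # true cursor position
    x_hat = 0.0   # internally estimated position
    # Histories hold the last delay_steps + 1 values; index 0 is the
    # value from delay_steps ago.
    true_hist = deque([0.0] * (delay_steps + 1), maxlen=delay_steps + 1)
    est_hist = deque([0.0] * (delay_steps + 1), maxlen=delay_steps + 1)
    errors = []
    for _ in range(n_steps):
        u = 1.0  # motor command (held constant here for simplicity)
        # Delayed visual feedback is compared with the estimate that
        # was made for the same moment in the past.
        prediction_error = true_hist[0] - est_hist[0]
        # The effector moves according to the true plant.
        x += plant_gain * u * dt
        # The estimate is advanced with the efference copy of u and
        # corrected by the gain-weighted prediction error.
        x_hat += u * dt + K * prediction_error
        true_hist.append(x)
        est_hist.append(x_hat)
        errors.append(abs(x - x_hat))
    return errors

without_correction = simulate(K=0.0)
with_correction = simulate(K=0.1)
print(without_correction[-1], with_correction[-1])
```

With K = 0 the estimate relies on the efference copy alone and drifts away from the true position, whereas a small positive K keeps the error bounded despite the delay. In this sketch, too large a gain relative to the delay would make the correction oscillate, which is why a small value is used.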

6. Tool-use, pantomiming and imagination

Neuropsychological studies have shown that some patients with ideomotor apraxia

(IMA), typically due to left parietal lesions, are unable to pantomime with

tools, despite the ability to manipulate the actual tools normally (Rapcsak

et al., 1995; De Renzi and Lucchelli, 1988). This finding suggests that the

neural mechanism for manipulating tools in everyday life differs from that for

pantomiming tool use.

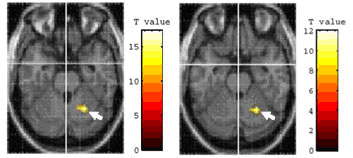

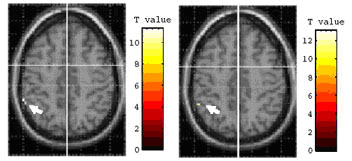

We examined the neural basis underlying tool-use behavior from the

perspective that use of a familiar tool involves a specific pathway that generates

actions without explicit retrieval of intended actions (Imazu et al., 2005).

Regional cerebral blood flow in healthy Japanese subjects was measured using

fMRI while the subject picked up an object with chopsticks, picked up an object

with the hand, pantomimed the use of chopsticks, imagined the use of chopsticks,

and imagined use of the hand. When comparing activations while picking up an

object using chopsticks (TU) with activations while imagining the use of chopsticks

(TI), bilateral areas of the cerebellum and the primary motor area were significantly

activated (Fig. 3a).

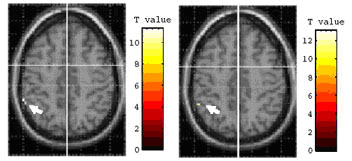

Using the inverse contrast, TI versus TU, the left inferior parietal

lobule (IPL) was significantly activated (Fig. 3b). Comparison of TU and pantomiming

the use of chopsticks (TP) revealed significant activation in the lateral right

cerebellum and bilateral postcentral gyri (Fig. 3a). Using the inverse contrast,

TP versus TU, significant activation in the left inferior parietal lobule was

observed (Fig. 3b).

Given these results, the left IPL appears to selectively contribute to tasks

requiring explicit retrieval of tool-related hand movements, such as pantomiming

and imagining tool use. Furthermore, a comparison of these tasks revealed that

activation in the lateral part of the right cerebellum increased during execution

of tool-use. The present findings support our speculation that actual use of

a familiar tool involves a different pathway from that of the mental imagery

process.

Acknowledgment

This study was supported by (1) the 21st Century COE Program, (2) the Advanced

and Innovational Research Program in Life Sciences from Ministry of Education,

Culture, Sports, Science and Technology, the Japanese Government, and (3) the

Research for the Future Program administered by the Japan Society for the Promotion

of Science (JSPS-RFTF99P01401).

References

[1] Arbib, M.A., and Rizzolatti, G. (1996) Neural expectations: a possible evolutionary

path from manual skills to language. Communication and Cognition, 29, 3, 393-424.

[2] De Renzi, E., and Lucchelli, F. (1988) Ideational apraxia. Brain, 111, 1173-1185.

[3] Goodale, M.A., Milner, A.D., Jakobson, L.S., and Carey, D.P. (1991) A neurological

dissociation between perceiving objects and grasping them. Nature, 349(6305),

154-156.

[4] Imazu, S., Sugio, T., Tanaka, S., and Inui, T. (2005) The difference between

actual usage of chopsticks and imagining of chopsticks use: an event-related

fMRI study. Cortex. in press.

[5] Meltzoff, A., and Gopnik, A. (1993) The role of imitation in understanding

persons and developing a theory of mind. In Baron-Cohen, S., Tager-Flusberg,

H. and Cohen, D.J. (Eds.), Understanding other minds, 335-366. New York: Oxford

Medical Publications.

[6] Meltzoff, A.N., and Moore, M.K. (1997) Explaining facial imitation: A theoretical

model. Early Development and Parenting, 6, 179-192.

[7] Ogawa, K., Inui, T., and Sugio, T. (2005) Neural correlates of state estimation

in visually guided movements: an event-related fMRI study. Cortex. in press.

[8] Rapcsak, S.Z., Ochipa, C., Anderson, K.C., and Poizner, H. (1995) Progressive

ideomotor apraxia, evidence for a selective impairment in the action production

system. Brain and Cognition, 27, 213-36.

[9] Rizzolatti, G., Fadiga, L., Gallese, V., and Fogassi, L. (1996) Premotor

cortex and the recognition of motor actions. Cognitive Brain Research, 3, 131-141.

Figure 1. The posterior parietal cortex (PPC) and the temporo-parietal junction (TPJ) observed in the current study.

Figure 2. The predictive control model of self-movement and brain activations observed in the current study.

Figure 3. a) The activation in the right cerebellum (slices at Z = -26 and Z = -24; contrasts TU > TP and TU > TI, p < 0.05, uncorrected). b) The activation in the left IPL (slices at Z = 46 and Z = 46; contrasts TP > TU and TI > TU, p < 0.05, uncorrected).

Profile

He is now a professor at the Department of Intelligence

Science and Technology, Graduate School of Informatics, Kyoto University. His

majors are cognitive science and computational neuroscience. He is presently engaged

in research on visual information processing and communication mechanisms.

He is an executive committee member of the Neuropsychology Association

of Japan, the Japanese Society for Cognitive Psychology, the Japanese Neuro-ophthalmology

Society, and the Japan Human Brain Mapping Society. He serves on the editorial

board of Neural Networks and Japanese Psychological Research. He is also chair

of the Human Communication Group of IEICE (the Institute of Electronics, Information

and Communication Engineers), an academic counselor member of the International

Institute for Advanced Studies, and an executive member of the Brain Century Promotion

Conference (a non-profit organization). His publications include “Inui,

T., and McClelland, J. L. (Eds., 1996) Attention and Performance XVI : Information

integration in perception and communication. Cambridge, MA: The MIT Press.”