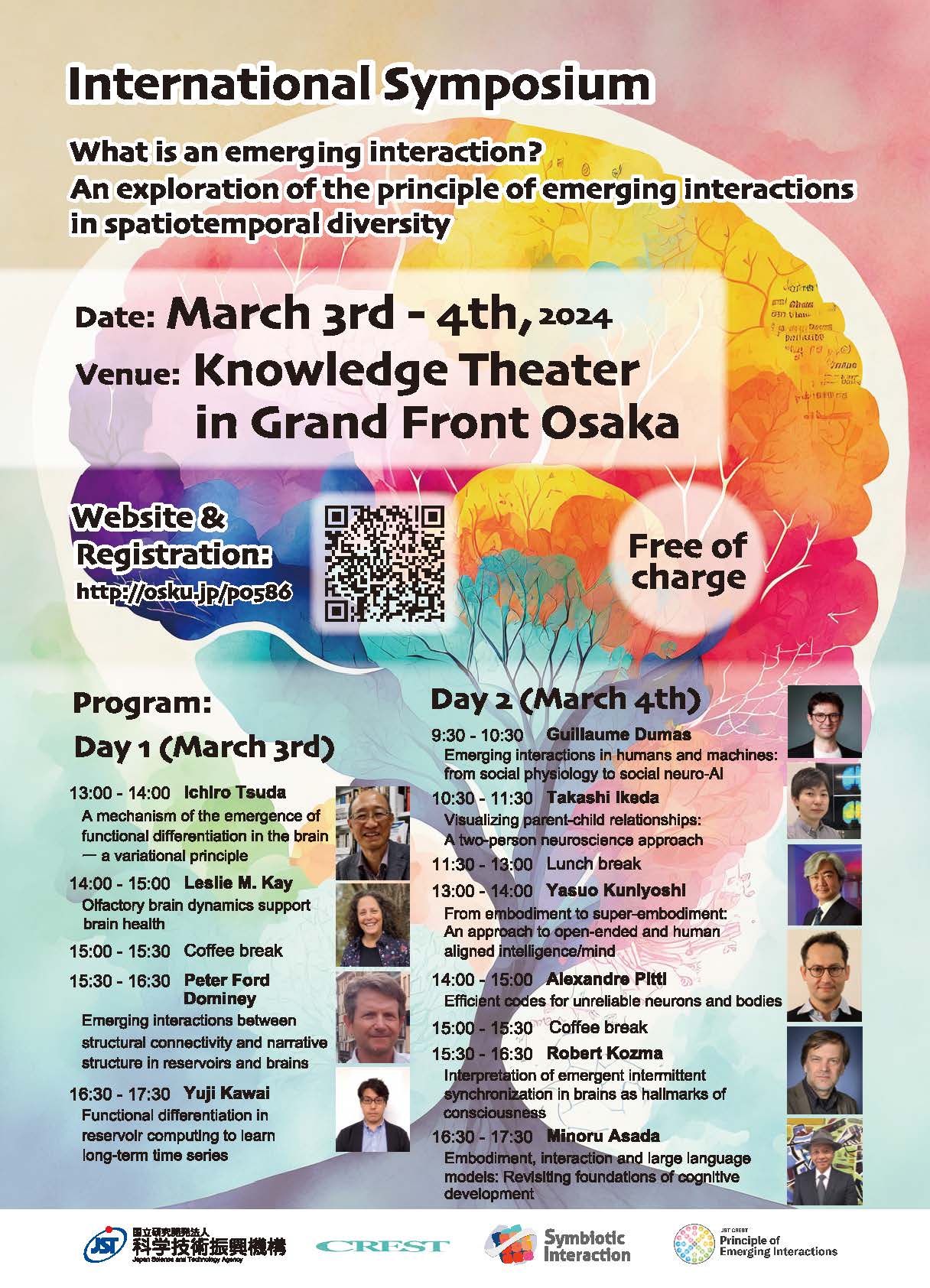

What is an emerging interaction? ― An exploration of the principle of emerging interactions in spatiotemporal diversity

- Date: March 3rd (Sun.) – 4th (Mon.), 2024

- Place: Knowledge Theater in Grand Front Osaka, Japan

- Registration: Registration page

- Registration deadline: February 29th (Sat.)

- Online registration is now closed. If you have not registered yet, please register on-site at the venue.

- Fee: Free of charge

- Language: English

- Sponsor: JST CREST “An exploration of the principle of emerging interactions in spatiotemporal diversity”

Program

【Day 1】March 3rd (Sun.)

| Time | Session |
| --- | --- |
| 12:30 – | Site Open |
| 13:00 – 14:00 | Ichiro Tsuda: A mechanism of the emergence of functional differentiation in the brain — a variational principle |
| 14:00 – 15:00 | Leslie M. Kay: Olfactory brain dynamics support brain health |
| 15:00 – 15:30 | Coffee break |
| 15:30 – 16:30 | Peter Ford Dominey: Emerging interactions between structural connectivity and narrative structure in reservoirs and brains |
| 16:30 – 17:30 | Yuji Kawai: Functional differentiation in reservoir computing to learn long-term time series |

【Day 2】March 4th (Mon.)

| Time | Session |
| --- | --- |
| 9:00 – | Site Open |
| 9:30 – 10:30 | Guillaume Dumas: Emerging interactions in humans and machines: from social physiology to social neuro-AI |
| 10:30 – 11:30 | Takashi Ikeda: Visualizing parent-child relationships: A two-person neuroscience approach |
| 11:30 – 13:00 | Lunch break |
| 13:00 – 14:00 | Yasuo Kuniyoshi: From embodiment to super-embodiment: An approach to open-ended and human aligned intelligence/mind |
| 14:00 – 15:00 | Alexandre Pitti: Efficient codes for unreliable neurons and bodies |
| 15:00 – 15:30 | Coffee break |
| 15:30 – 16:30 | Robert Kozma: Interpretation of emergent intermittent synchronization in brains as hallmarks of consciousness |
| 16:30 – 17:30 | Minoru Asada: Embodiment, interaction, and large language models: Revisiting foundations of cognitive development |

Leaflet

Abstract

Ichiro Tsuda

Professor at Chubu University Academy of Emerging Sciences / Center for Mathematical Science and Artificial Intelligence, Chubu University, Japan

A mechanism of the emergence of functional differentiation in the brain — a variational principle

The purpose of this talk is to show how brains develop while yielding functional neural units. Our approach is based on self-organization with constraints, which can be formulated using a standard but rarely used variational principle.

Leslie M. Kay

Professor at Department of Psychology & Institute for Mind and Biology, The University of Chicago, Chicago, IL USA

Olfactory brain dynamics support brain health

Olfactory system neurophysiology challenges computational metaphors of brain function that rely on information transfer. Dynamics associated with smell stress the importance of system connectivity and distributed perceptual states that change with time and meaning. Olfactory system activity supports proper functioning of memory and affective state. Damage to the central olfactory system is implicated in neurodegeneration and dementia, and early reports post-COVID-19 suggest brain changes associated with the olfactory system that may progress to cognitive decline.

Peter Ford Dominey

Research Director at CNRS and INSERM U1093 CAPS, Dijon, France

Emerging interactions between structural connectivity and narrative structure in reservoirs and brains

Brain function can be characterized in part by the emerging interaction between structural connectivity and inherent structure in the input. This is revealed in a temporal processing hierarchy in reservoir models and in human brains, when connectivity is constrained by a distance rule. The resulting functional connectivity is further modulated by inherent structure in the narrative input, suggesting an input-driven reconfiguration of the computational substrate, for reservoirs and brains.

Yuji Kawai

Associate Professor at Symbiotic Intelligent Systems Research Center, Institute for Open and Transdisciplinary Research Initiatives, Osaka University, Japan

Functional differentiation in reservoir computing to learn long-term time series

Guillaume Dumas

Associate Professor at University of Montreal, Canada

Emerging interactions in humans and machines: from social physiology to social neuro-AI

Cognitive sciences have approached social cognition from two complementary perspectives. On the one hand, social psychology and behavioral economics have mostly emphasized mentalizing, social perception, and our ability to model other minds. On the other hand, developmental psychology and motor neuroscience have focused more on imitation, social interaction, and our propensity to coordinate with others’ behavior. This polarization leads to a “chicken-and-egg paradox” regarding the origin of social cognition in humans: the former claim that we need to model others to interact with them, while the latter argue that we first need to interact with others to model their minds. These two perspectives operate at different levels of explanation with different conceptual and mathematical formalisms. For instance, while Bayesian statistics captures social computations well during offline economic games, social coordination during sensorimotor coupling is better modeled using dynamical systems. In this talk, I will illustrate how we can operationalize a “social physiology” that models human cognition as a multi-scale complex system interfacing biological and social processes. The term “physiology” was introduced by Claude Bernard (1865) not as a medical subfield but rather as a systemic and integrative posture towards biological functions. Here, we will expand this posture by including social functions, using experimental approaches in both natural and artificial agents. We will start with multi-brain neuroscience and how inter-brain connectivity provides a quantitative marker for bridging the gap between intra-personal mechanisms and inter-personal dynamics. Then, we will continue with neuro-inspired artificial intelligence and how the observations initially described in interactive social neuroscience can inspire architectures for virtual avatars and machine learning algorithms.

Takashi Ikeda

Associate Professor at Research Center for Child Mental Development, Kanazawa University, Japan

Visualizing parent-child relationships: A two-person neuroscience approach

In interactive situations, adaptive behaviors toward others emerge when the perception-action loops of the individuals involved become coupled with one another. Many researchers have attempted to quantify the quality of interpersonal relationships through questionnaires and behavioral observations. However, the neural basis of interpersonal relationships remains unclear, especially in the parent-child relationship, which is the most primitive dyad. A technique called hyperscanning has been used to investigate the neural basis of these adaptive behaviors. In the present study, parent-child pairs, including preschool and elementary school children, performed a synchronous tapping task. This talk will present a hyperscanning MEG experiment using this parent-child simultaneous tapping task and discuss how its results relate to the questionnaire and behavioral observations.

Yasuo Kuniyoshi

Professor at Graduate School of Information Science and Technology, The University of Tokyo, Japan

Director of Next Generation Artificial Intelligence Research Center, The University of Tokyo, Japan

From embodiment to super-embodiment: An approach to open-ended and human aligned intelligence/mind

Embodiment is a key to solving the reliability and alignment issues in current AI. This is because it imposes consistent constraints on the entire agent-environment interaction and the accompanying information without specifying their actual contents, and the constraints are common to agents with similar embodiment. The concept of embodiment should be generalized beyond the mechanical properties of the body and the information structure of sensory signals to encompass internal organs and metabolism, mental processes, and inter-agent interactions, yielding “super-embodiment”. It can address sensibilities, values, and morals toward artificial humanity, which will be critically important for next-generation AI.

Alexandre Pitti

Professor, NeuroCybernetics team

Laboratoire ETIS, CY Cergy Paris Université, ENSEA, CNRS, UMR8051

Efficient codes for unreliable neurons and bodies

While current Artificial Intelligence systems require unlimited data, time, and power resources to converge, infants rapidly learn highly informative knowledge from just a few samples, with very limited resources and capabilities. We propose that infants follow the principle of entropy maximization stated by Barlow, according to which an efficient code keeps the useful information (entropy) and removes the useless information (redundancy). By doing so, the brain may exploit its own complexity to learn continuously throughout life, to optimize its storage capacity in terms of information (compression), to preserve old memories when acquiring new ones (avoiding catastrophic forgetting), and to rapidly retrieve old memories (reconstruction). Recently, my colleagues and I developed a neural network that satisfies entropy maximization in order to encode information. Despite the unreliability of neurons, this new associative memory has allowed the rapid learning of very high-resolution memories (with a 1-billion-fold ratio) using only a few neurons, and has been shown not to forget catastrophically when acquiring new information. We demonstrated that this type of encoding achieves memory capacities close to Shannon’s information-theoretic limit, in line with Barlow’s hypothesis of efficient encoding by information maximization.

Robert Kozma

Department of Mathematics, University of Memphis, TN, USA

Graduate Research Center, Obuda University, Budapest, Hungary

Kozmos Research Laboratories, Boston, MA, USA

Interpretation of emergent intermittent synchronization in brains as hallmarks of consciousness

Studies of spatio-temporal brain activity in humans and other mammals have identified beta/gamma oscillations (20-80 Hz), which are self-organized into spatio-temporal structures recurring at theta/alpha rates (4-12 Hz). The intermittent collapse of these self-organized structures at theta/alpha rates generates laterally propagating phase gradients (phase cones), ignited at some specific location of the cortical sheet. The rapid expansion of essentially isotropic phase cones is consistent with the propagation of perceptual broadcasts postulated by Global Workspace Theory (GWT). This talk introduces neuropercolation theory, which views brains as non-equilibrium thermodynamic systems operating at the edge of criticality and undergoing repeated phase transitions. Our work combines extensive brain monitoring results with cognitive and graph-theoretical models. This work is in collaboration with B.J. Baars and N. Geld, expanding on W.J. Freeman’s neurodynamics principles.

Minoru Asada

Vice-President of International Professional University of Technology in Osaka, Japan

Strategic Adviser of Symbiotic Intelligent Systems Research Center, Institute for Open and Transdisciplinary Research Initiatives, Osaka University, Japan

Visiting Professor at Chubu University Academy of Emerging Sciences, Japan

PI at CiNet, National Institute of Information and Communications Technology, Japan

Embodiment, interaction, and large language models: Revisiting foundations of cognitive development

Physical embodiment and social interaction are traditionally viewed as cornerstones of Cognitive Developmental Robotics (CDR), guiding our understanding of human cognitive and affective development through computer simulations and robotic experiments. Central to this is language acquisition, traditionally believed to be unattainable without these physical and social dimensions. However, the emergence of advanced Large Language Models (LLMs) challenges this notion, demonstrating language understanding and generation capabilities without physical embodiment or traditional interaction. This talk delves into the implications of LLMs for our understanding of cognitive development, questioning and redefining concepts of agency, body ownership, and self-consciousness in artificial systems. We will explore how these findings not only redefine theoretical underpinnings in CDR but also open new avenues in robotics and AI, offering a fresh perspective on the parallels and divergences between human cognition and artificial intelligence.